AI models are being developed and released at an increasing pace. These are targeted at a wide range of use cases ranging from understanding text, generating images, providing remote medical diagnoses and detecting defects in images.

This provides a tremendous opportunity to build AI functionality into front-line applications. But doing so requires the app developer to deal with a number of choices and tasks:

- Which AI tools are the best for the application, and how to maintain flexibility to update or replace it as new options become available?

- How to collect, store, clean, classify and label data to queue up AI training models?

- Where should the model run? On a server? On a mobile device? A hybrid approach?

- How to transform the AI model for use on the chosen hardware? CPU, GPU, or TPU? Servers or in mobile devices?

- How is production data distributed securely? How to integrate the model with production data and the workflow desired by the application user?

Creating and “gluing” these components together can be a time-consuming task that will tend to limit the extent to which AI is incorporated into applications. Being able to react nimbly to the release of the AI capabilities or to extend the use case is also very difficult in a traditional hand assembled manner.

Umajin is an application development platform that lets users efficiently create next-gen front line and mobile applications as well as author extended reality content using collaborative no-code/low-code tools. The platform allows users to easily create applications and experiences that incorporate both 3D visual elements and AI components. These can include using AI elements such as voice recognition or object detection into workflow applications, machine vision applications trained to detect production defects, or digital avatars that provide a more natural user interface for an application.

Umajin has several components that contribute to delivering a flexible pipeline which allow new innovations in AI to be quickly applied in applications.

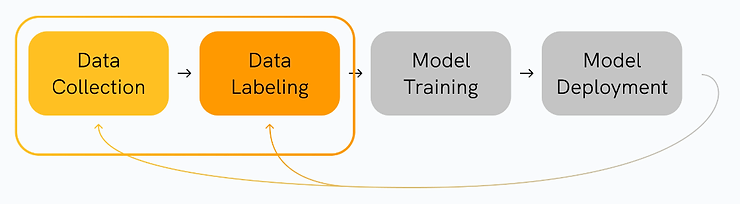

- Umajin Server is a turnkey database and API layer making it easy to build applications and to collect raw data securely, to store, clean, classify and label data, to queue up training models. It stores meta data and manages the corpus of training data (often images which need to be labelled).

- Umajin Server manages the training to create new models, which can then be prepared or transformed for different uses. It can be made to run on a server, server with GPU and server with TPU. These servers are either in the cloud or on premise (what’s known as the edge)

- The models can also be slimmed down and targeted at CPU, GPU and or TPU for mobile devices. When run on mobile devices, it may be valuable to perform some basic event detection on the edge device, and then pass a subset of the image or data to the cloud where a more sophisticated model can be run. For example, the edge smartphone may find cars, then the server may read the number plates.

- Umajin Cloud is a content management system that manages versions of assets and distributes models and data to edge devices. The content can be distributed using several type of networks depending on the use case—from global content distribution networks to point-to-point encrypted connections

- The Umajin Native Runtime on devices (computers, smartphones and embedded devices) allows high performance applications using cameras, lighting, CPU, GPU and TPU to be updated and distributed to the edge

Umajin’s multi-user collaborative visual editor allows AI components to be packaged as components that can be accessed in shared library via a drag and drop interface. This allows a broader range of users to incorporate AI components into front line applications that utilize other tools in the Umajin platform.

For example, Umajin has proprietary specular photometry technology that extracts highly detailed data on the surface characteristics of objects, providing a rich data set that can be used by Umajin’s family of accelerated image processing and machine vision tools, or open source AI frameworks, for identifying things like defects. The identified defects can then be incorporated into a workflow to both rework the defect and to identify the root causes allow process redesign to improve yields.

Why not incorporate AI in every application? We are working to reduce the friction of doing so!