The infrastructure mania is cooling, but a more durable, and profitable, economy is emerging downstream.

For the past three years, the dominant AI investment thesis was predicated on the scarcity of advanced computational resources. The thesis was simple: buy the pick-and-shovel makers. As long as the hyperscalers (Amazon, Microsoft, Google, and Meta) were locked in an existential arms race to secure compute capacity, companies like Nvidia and Broadcom were a sure bet. The results of this scramble are evident in the equity markets. Since the release of ChatGPT in late 2022, Nvidia’s stock price has increased 12-fold, Broadcom’s has risen 7-fold, and GE Vernova, a key supplier of power generation for these hungry data centers, has quadrupled since its April 2024 spin-off from GE. OpenAI is not publicly traded, but its value is estimated to have increased 17-fold during the same period. Hardware and foundation models captured a disproportionate share of the industry’s initial value.

The recent volatility surrounding Oracle and the turbulent trading of CoreWeave signal a shift in market psychology. The uncertainty stems from a disconnect between capital expenditure and revenue. Hyperscalers are currently spending over $300 billion annually on infrastructure, yet the annualized revenue from AI-specific software remains a fraction of that. This “Revenue Gap,” estimated by some analysts at over $500 billion, is hanging over the sector. The “bigger is better” scaling laws that justified massive GPU clusters are being reconsidered as OpenAI competitors like DeepSeek V3.2 and Google’s Gemini 3 Flash demonstrate that high-level LLM performance can be achieved at a fraction of the cost. Custom silicon, such as Google’s Trillium TPUs and AWS Trainium 3, is substituting for GPUs in many workloads. The rental price of Nvidia’s workhorse H100 GPU has fallen from a peak of $8.00 per hour to under $2.00 in spot markets.

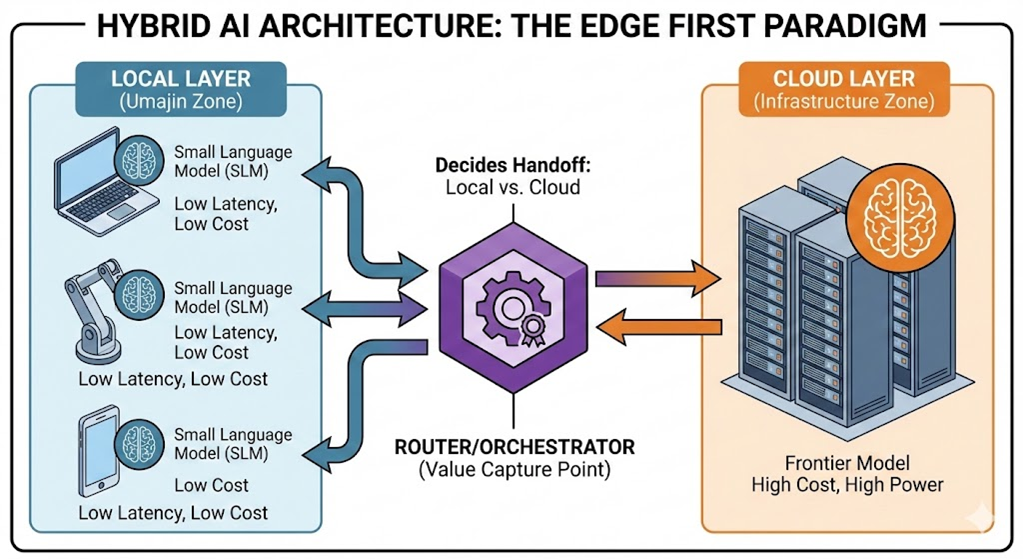

Lower hardware margins and more efficient training and inference algorithms will lead to cheaper intelligence. This deflation is necessary. If advanced AI services cost $10+ per task (an estimate of the cost of complex “reasoning” requests on the newest models), they will be restricted to high-value enterprise applications. To achieve ubiquity, where AI is optimizing everything from factory processes to family schedules, the cost must drop to pennies. This price sensitivity will also drive a migration toward “Hybrid AI Architectures,” where most workloads are processed on lower-cost edge hardware, sending only complex inquiries to large cloud AI models.
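To make the hybrid routing idea concrete, here is a minimal sketch in Python. It assumes each request already carries a complexity score, and the names (`Request`, `route`, `blended_cost`), the threshold, and the per-request costs are all hypothetical values chosen for illustration, not measured prices or any vendor’s API.

```python
from dataclasses import dataclass

# Illustrative per-request costs in dollars. These are assumptions for the
# sketch, loosely echoing the "pennies" vs. "$10+" contrast in the text.
EDGE_COST = 0.01
CLOUD_COST = 10.00


@dataclass
class Request:
    prompt: str
    complexity: float  # hypothetical score in [0, 1]; how it is computed is out of scope


def route(request: Request, threshold: float = 0.8) -> str:
    """Send routine work to the edge and only complex inquiries to the cloud."""
    return "cloud" if request.complexity >= threshold else "edge"


def blended_cost(requests: list[Request], threshold: float = 0.8) -> float:
    """Average cost per request under the routing rule above."""
    if not requests:
        return 0.0
    total = sum(
        CLOUD_COST if route(r, threshold) == "cloud" else EDGE_COST
        for r in requests
    )
    return total / len(requests)


if __name__ == "__main__":
    # Nine routine requests and one complex one.
    workload = [Request("routine", 0.1)] * 9 + [Request("complex", 0.95)]
    print(f"average cost per request: ${blended_cost(workload):.3f}")
```

Under these assumed numbers, the average cost is dominated by the share of requests that reach the cloud, which is why the routing threshold matters more than the edge cost itself.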

The industry is beginning the transition from a phase of capital-intensive model training to the actual use of these models to deliver economic benefit. Sustainability means developing downstream applications that customers are actually willing to pay for, not because they are “AI-powered,” but because they solve expensive problems. Many consumers find value in chatbots today, but most users have free accounts and pay no subscription fee. Monetizing these accounts, either by converting more of them to paid subscriptions or through advertising, is one step in closing the revenue gap. But the biggest opportunity is in downstream business model innovation. The growth of reasoning models, agentic applications, and physical AI, enabled by continually improving models, lower token prices, and applications that embed AI in users’ workflows, will create immense economic value.

What factors will determine how much of that value is retained by upstream hardware and AI data center (“AI factory”) providers, versus application vendors? In the initial AI infrastructure buildout cycle (2022–2025), hardware has been the primary bottleneck and has captured most of the value. The capital expenditure required to train and host frontier models means that a small group of hardware makers, AI companies, and hyperscale data center operators will continue to control the “intelligence utility” for frontier models, so long as models continue to advance. Assuming chatbots are effectively monetized and ubiquitous downstream applications emerge, the most efficient hardware providers and “AI factories” will be very profitable, although valuations may slowly deflate.

AI-enabled downstream business models will see a huge inflow of value, but it will not be evenly distributed. Lower-cost generative AI lowers the barrier to writing code, making it easier to build applications. This creates a flood of “wrapper” apps, which are little more than a custom interface to a large language model. Increasing competition will destroy downstream pricing power for these “thin” applications. If an application’s primary value is “using AI to do X,” and AI is accessible to everyone, the application has no competitive moat.

The “moats” of the future will be built on intellectual property that anticipates technical convergence, proprietary data that enables differentiation, and the agility to orchestrate AI tools within strict governance frameworks. The winners of the next phase will be the “integrators” and “platform” companies that adopt a “System-of-Systems” approach, along with the creators of new use cases that leverage the convergence of AI with other advancing technologies. In this paradigm, an AI model is not a standalone product but a component within a complex system or workflow. Consider a logistics hub. A simple AI wrapper might write an email about a shipping delay. A true System-of-Systems uses vision AI to detect the delay, updates the ERP, re-routes the truck via IoT, and notifies the driver, all without human intervention. This integrated complexity is harder to replicate than a text-generation model, but its benefits will be transformational for those who do it best. An enterprise that operates at “machine speed” thanks to deep integration of AI throughout its value chain will be as different from today’s enterprise as algorithmic trading is from the Wall Street of the 1980s.
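The logistics example can be sketched as a small orchestration pipeline. This is only an illustration under stated assumptions: every name here (`Shipment`, `detect_delay`, `update_erp`, `reroute_truck`, `notify_driver`, `handle_shipment`) is hypothetical, and each step is a stub standing in for a real vision model, ERP system, IoT fleet service, or messaging channel.

```python
from dataclasses import dataclass, field


@dataclass
class Shipment:
    shipment_id: str
    truck_id: str
    delayed: bool = False
    route: str = "primary"
    log: list[str] = field(default_factory=list)  # audit trail of automated actions


def detect_delay(shipment: Shipment, backlog_seen: bool) -> bool:
    # Stand-in for a vision model; here the "detection" is passed in directly.
    shipment.delayed = backlog_seen
    shipment.log.append(f"vision: delayed={backlog_seen}")
    return backlog_seen


def update_erp(shipment: Shipment) -> None:
    # Stand-in for writing the new status to an ERP system.
    shipment.log.append(f"erp: {shipment.shipment_id} marked DELAYED")


def reroute_truck(shipment: Shipment) -> None:
    # Stand-in for an IoT command sent to the truck's routing unit.
    shipment.route = "alternate"
    shipment.log.append(f"iot: {shipment.truck_id} re-routed")


def notify_driver(shipment: Shipment) -> None:
    # Stand-in for a message to the driver.
    shipment.log.append(f"notify: driver of {shipment.truck_id} informed")


def handle_shipment(shipment: Shipment, backlog_seen: bool) -> Shipment:
    """Run the detect, update, re-route, notify chain with no human in the loop."""
    if detect_delay(shipment, backlog_seen):
        update_erp(shipment)
        reroute_truck(shipment)
        notify_driver(shipment)
    return shipment


if __name__ == "__main__":
    result = handle_shipment(Shipment("S-001", "T-17"), backlog_seen=True)
    for entry in result.log:
        print(entry)
```

The point of the sketch is that the model call is one step among four. The hard part to replicate is the set of connections to the ERP, the fleet, and the driver, together with the audit log that a governance framework would require.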

As the market matures, it will segment into verticals such as the “AI-Driven Grid Market” or the “AI-Driven Logistics Market,” with multiple verticals sharing a common converged infrastructure in which AI is integrated with data and sensor networks, payment and security infrastructure, and operations technologies, among others. While hardware providers will remain profitable with reduced margins, “thin” application companies will struggle. The lion’s share of value will be captured by those who possess the IP and the industrial integration to create true agentic processes, serving the 80% of the global workforce on the front lines as well as those behind a desk.

The initial AI infrastructure has been built and has created a great deal of value for a few companies. The real work, and the real value creation, is just beginning.